Generative AI and particularly LLMs (Large Language Models) have exploded into the public consciousness. Like many software developers I’m intrigued by the possibilities, but unsure what exactly it will mean for our profession in the long run. I’ve now taken on a role in Thoughtworks to coordinate our work on how this technology will affect software delivery practices. I’ll be posting various memos here to describe what my colleagues and I are learning and thinking.

Latest Memo: The role of developer skills in agentic coding

25 March 2025

As agentic coding assistants become more capable, reactions vary widely. Some extrapolate from recent advancements and claim, “In a year, we won’t need developers anymore.” Others raise concerns about the quality of AI-generated code and the challenges of preparing junior developers for this changing landscape.

In the past few months, I’ve regularly used the agentic modes in Cursor, Windsurf and Cline, almost exclusively for changing existing codebases (as opposed to creating Tic Tac Toe from scratch). I’m overall very impressed by the recent progress in IDE integration and how those integrations massively boost the way in which the tools can assist me. They

- execute tests and other development tasks, and try to immediately fix the errors that occur

- automatically pick up on and try to fix linting and compile errors

- can do web research

- in some cases even have browser preview integration, to pick up on console errors or check DOM elements

All of this has led to impressive collaboration sessions with AI, and sometimes helps me build features and figure out problems in record time.

However.

Even in those successful sessions, I intervened, corrected and steered all the time. And often I decided to not even commit the changes. In this memo, I’ll list concrete examples of that steering, to illustrate what role the experience and skills of a developer play in this “supervised agent” mode. These examples show that while the advancements have been impressive, we’re still far away from AI writing code autonomously for non-trivial tasks. They also give ideas of the types of skills that developers will still have to apply for the foreseeable future. Those are the skills we have to preserve and train for.

Where I’ve had to steer

I want to preface this by saying that AI tools are not categorically and always bad at the things that I’m listing. Some of the examples can even be easily mitigated with additional prompting or custom rules. Mitigated, but not fully controlled: LLMs frequently don’t listen to the letter of the prompt. The longer a coding session gets, the more hit-and-miss it becomes. So the things I’m listing absolutely have a non-negligible probability of happening, regardless of the rigor in prompting, or the number of context providers integrated into the coding assistant.

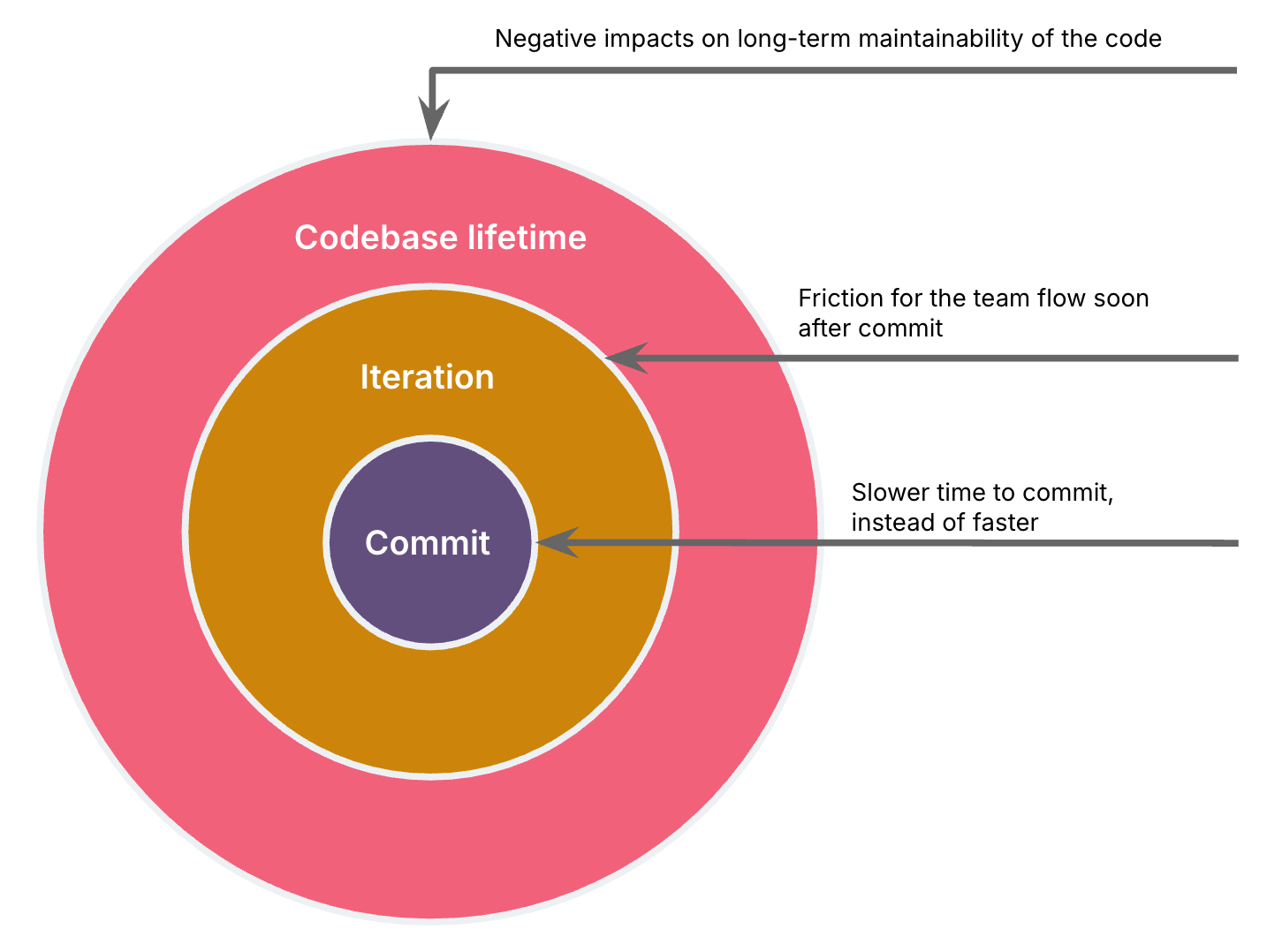

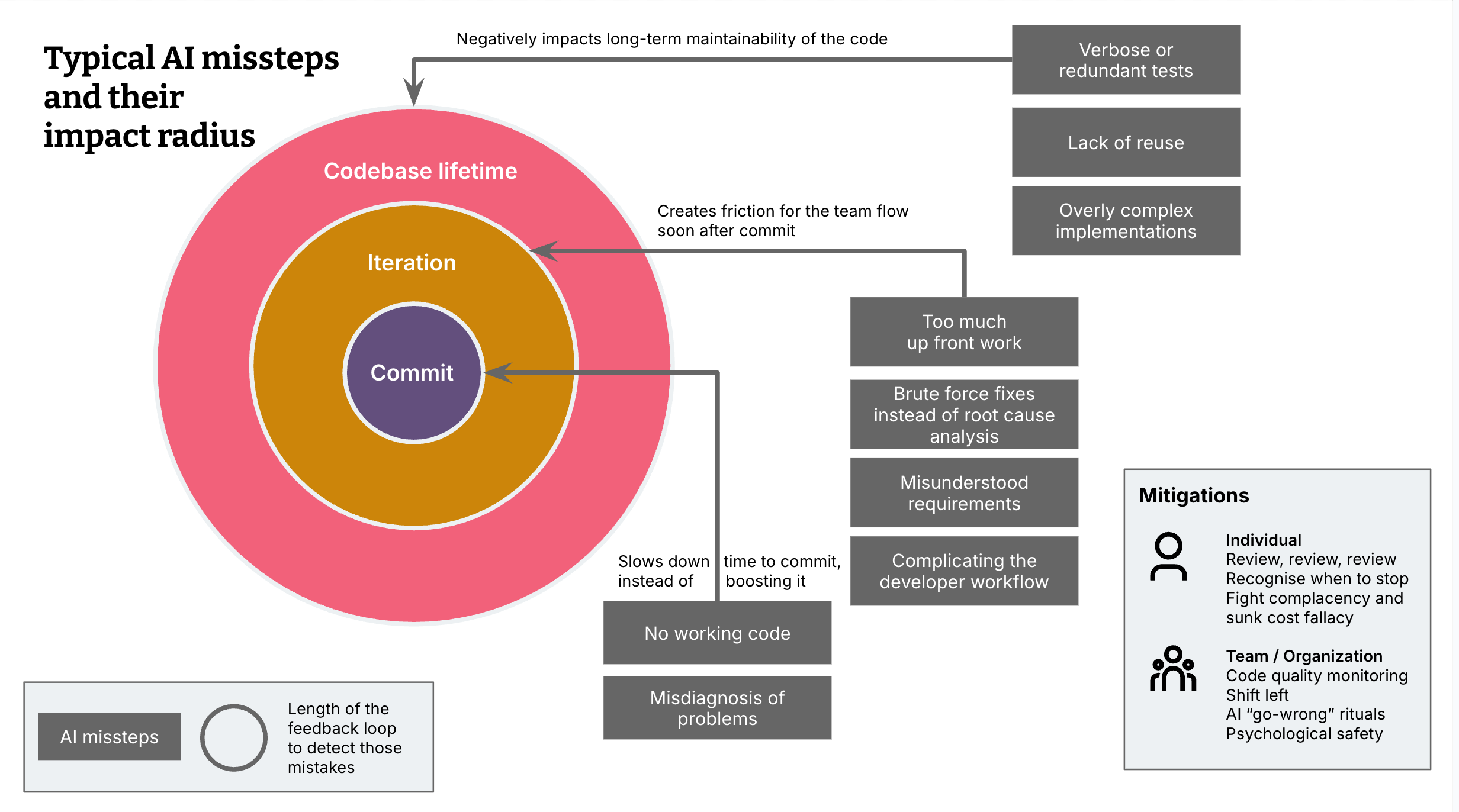

I’m categorising my examples into 3 types of impact radius, AI missteps that:

a. slow down my speed of development and time to commit instead of speeding it up (compared to unassisted coding), or

b. create friction for the team flow in that iteration, or

c. negatively impact long-term maintainability of the code.

The bigger the impact radius, the longer the feedback loop for a team to catch those issues.

Impact radius: Time to commit

These are the cases where AI hindered me more than it helped. This is actually the least problematic impact radius, because it’s the most obvious failure mode, and the changes most probably will not even make it into a commit.

No working code

At times my intervention was necessary to make the code work, plain and simple. So my experience came into play either because I could quickly correct where it went wrong, or because I knew early when to give up, and either start a new session with AI or work on the problem myself.

Misdiagnosis of problems

AI goes down rabbit holes quite frequently when it misdiagnoses a problem. Many of those times I can pull the tool back from the edge of those rabbit holes based on my previous experience with those problems.

Example: It assumed a Docker build issue was due to architecture settings for that Docker build and changed those settings based on that assumption, when in reality the issue stemmed from copying node_modules built for the wrong architecture. As that is a typical problem I’ve come across many times, I could quickly catch it and redirect.

Impact radius: Team flow in the iteration

This category is about cases where a lack of review and intervention leads to friction on the team during that delivery iteration. My experience of working on many delivery teams helps me correct these before committing, as I’ve run into these second order effects many times. I imagine that even with AI, new developers will learn this by falling into these pitfalls and learning from them, the same way I did. The question is whether the increased coding throughput with AI exacerbates this to a point where a team cannot absorb it sustainably.

Too much up-front work

AI often goes broad instead of incrementally implementing working slices of functionality. This risks wasting large upfront work before realizing that a technology choice isn’t viable, or that a functional requirement was misunderstood.

Example: During a frontend tech stack migration task, it tried converting all UI components at once rather than starting with one component and a vertical slice that integrates with the backend.

Brute-force fixes instead of root cause analysis

AI sometimes took brute-force approaches to solve issues rather than diagnosing what actually caused them. This delays the underlying problem to a later stage, and pushes it to other team members who then have to analyse it without the context of the original change.

Example: When encountering a memory error during a Docker build, it increased the memory settings rather than questioning why so much memory was used in the first place.

Complicating the developer workflow

In one case, AI generated build workflows that create a bad developer experience. Pushing these changes would almost immediately have an impact on other team members’ development workflows.

Example: Introducing two commands to run an application’s frontend and backend, instead of one.

Example: Failing to ensure hot reload works.

Example: Complicated build setups that confused both me and the AI itself.

Example: Handling errors in Docker builds without considering how these errors could be caught earlier in the build process.

Misunderstood or incomplete requirements

Sometimes when I don’t give a detailed description of the functional requirements, AI jumps to the wrong conclusions. Catching this and redirecting the agent doesn’t necessarily need special development experience, just attention. However, it happened to me frequently, and is an example of how fully autonomous agents can fail when they don’t have a developer watching them work and intervening at the beginning, rather than at the end. In either case, be it a developer who doesn’t think along or an agent that is fully autonomous, this misunderstanding will be caught later in the story lifecycle, and it will cause a bunch of back and forth to correct the work.

Impact radius: Long-term maintainability

This is the most insidious impact radius because it has the longest feedback loop: these issues might only be caught weeks or months later. These are the types of cases where the code will work fine for now, but will be harder to change in the future. Unfortunately, it’s also the category where my 20+ years of programming experience mattered the most.

Verbose and redundant tests

While AI can be fantastic at generating tests, I frequently find that it creates new test functions instead of adding assertions to existing ones, or that it adds too many assertions, i.e. some that were already covered in other tests. Counterintuitively for less experienced programmers, more tests are not necessarily better. The more tests and assertions get duplicated, the harder they are to maintain, and the more brittle the tests get. This can lead to a state where whenever a developer changes part of the code, multiple tests fail, leading to more overhead and frustration. I’ve tried to mitigate this behaviour with custom instructions, but it still happens frequently.
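To make the duplication concrete, here is a minimal sketch. The function `apply_discount` and all test names are hypothetical and not taken from my codebase; the point is only the contrast between tests that re-assert each other’s behaviour and a consolidated set where each behaviour is asserted once.

```python
# Hypothetical function under test; an assumption for illustration only.
def apply_discount(price: float, percent: float) -> float:
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


# Redundant style: both tests assert the same behaviour, so one change
# to the discount logic breaks several tests at once.
def test_discount_returns_float():
    assert isinstance(apply_discount(100.0, 10), float)
    assert apply_discount(100.0, 10) == 90.0  # repeated in the next test


def test_discount_ten_percent():
    assert apply_discount(100.0, 10) == 90.0  # same assertion again


# Consolidated style: one table of cases, each behaviour asserted once.
DISCOUNT_CASES = [
    (100.0, 0, 100.0),
    (100.0, 10, 90.0),
    (80.0, 25, 60.0),
    (100.0, 100, 0.0),
]


def test_discount_cases():
    for price, percent, expected in DISCOUNT_CASES:
        assert apply_discount(price, percent) == expected


def test_discount_rejects_out_of_range_percent():
    for bad_percent in (-1, 101):
        try:
            apply_discount(100.0, bad_percent)
        except ValueError:
            continue
        raise AssertionError(f"expected ValueError for percent={bad_percent}")
```

In the consolidated version, adding a new case means adding one row to the table rather than one more test function that overlaps with its neighbours.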

Lack of reuse

AI-generated code sometimes lacks modularity, making it difficult to apply the same approach elsewhere in the application.

Example: Not realising that a UI component is already implemented elsewhere, and therefore creating duplicate code.

Example: Use of inline CSS styles instead of CSS classes and variables.

Overly complex or verbose code

Sometimes AI generates too much code, requiring me to remove unnecessary elements manually. This can be code that is technically unnecessary and makes the code more complex, which will lead to problems when changing the code in the future. Or it can be more functionality than I actually need at that moment, which can increase maintenance cost for unnecessary lines of code.

Example: Every time AI does CSS changes for me, I then go and remove sometimes massive amounts of redundant CSS styles, one by one.

Example: AI generated a new web component that could dynamically display data inside of a JSON object, and it built a very elaborate version that was not needed at that point in time.

Example: During refactoring, it failed to recognize the existing dependency injection chain and introduced unnecessary additional parameters, making the design more brittle and harder to understand. E.g., it introduced a new parameter to a service constructor that was unnecessary, because the dependency that provided the value was already injected. (value = service_a.get_value(); ServiceB(service_a, value=value))
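The constructor example can be sketched roughly as follows. Only the names service_a and ServiceB come from the snippet above; the class bodies, `ServiceBRedundant` and `describe` are assumptions made up for illustration, not the actual code.

```python
class ServiceA:
    """Hypothetical dependency that owns the value."""

    def __init__(self, value: str) -> None:
        self._value = value

    def get_value(self) -> str:
        return self._value


class ServiceBRedundant:
    """Shape of the unnecessary change: `value` duplicates what service_a provides."""

    def __init__(self, service_a: ServiceA, value: str) -> None:
        self.service_a = service_a
        self.value = value  # second source of truth that can drift from service_a


class ServiceB:
    """Leaner alternative: rely on the dependency that is already injected."""

    def __init__(self, service_a: ServiceA) -> None:
        self.service_a = service_a

    def describe(self) -> str:
        # Ask the injected dependency when the value is needed.
        return f"value={self.service_a.get_value()}"


service_a = ServiceA("42")

# Redundant call site: the caller has to fetch and forward the value itself.
redundant = ServiceBRedundant(service_a, value=service_a.get_value())

# Leaner call site: one argument, one source of truth.
service_b = ServiceB(service_a)
print(service_b.describe())
```

With the extra parameter, every call site has to know where the value comes from, which is exactly the knowledge the injection chain was meant to hide.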

Conclusions

These experiences mean that by no stretch of my personal imagination will we have AI that writes 90% of our code autonomously in a year. Will it assist in writing 90% of the code? Maybe. For some teams, and some codebases. It assists me in 80% of the cases today (in a moderately complex, relatively small 15K LOC codebase).

What can you do to safeguard against AI missteps?

So how do you safeguard your software and team against the capriciousness of LLM-backed tools, to take advantage of the benefits of AI coding assistants?

Individual coder

- Always carefully review AI-generated code. It’s very rare that I do NOT find something to fix or improve.

- Stop AI coding sessions when you feel overwhelmed by what’s going on. Either revise your prompt and start a new session, or fall back to manual implementation, or “artisanal coding”, as my colleague Steve Upton calls it.

- Stay cautious of “good enough” solutions that were miraculously created in a very short amount of time, but introduce long-term maintenance costs.

- Practice pair programming. Four eyes catch more than two, and two brains are less complacent than one.

Team and organization

- Good ol’ code quality monitoring. If you don’t have them already, set up tools like Sonarqube or Codescene to alert you about code smells. While they can’t catch everything, they are a good building block of your safety net. Some code smells become more prominent with AI tools and should be more closely monitored than before, e.g. code duplication.

- Pre-commit hooks and IDE-integrated code review. Remember to shift-left as much as possible: there are many tools that review, lint and security-check your code during a pull request, or in the pipeline. But the more you can catch directly during development, the better.

- Revisit good code quality practices. In light of the types of pitfalls described here, and other pitfalls a team experiences, create rituals that reiterate practices to mitigate the outer two impact radiuses. For example, you could keep a “Go-wrong” log of events where AI-generated code led to friction on the team, or affected maintainability, and reflect on them once a week.

- Make use of custom rules. Most coding assistants now support the configuration of rule sets or instructions that will be sent along with every prompt. You can make use of those as a team to iterate on a baseline of prompt instructions to codify your good practices and mitigate some of the missteps listed here. However, as mentioned at the beginning, it is by no means guaranteed that the AI will follow them. The larger a session and therefore a context window gets, the more hit or miss it becomes.

- A culture of trust and open communication. We are in a transition phase where this technology is seriously disrupting our ways of working, and everybody is a beginner and learner. Teams and organizations with a trustful culture and open communication are better equipped to learn and deal with the vulnerability this creates. For example, an organization that puts high pressure on its teams to deliver faster “because you now have AI” is more exposed to the quality risks mentioned here, because developers might cut corners to fulfill the expectations. And developers on teams with high trust and psychological safety will find it easier to share their challenges with AI adoption, and help the team learn faster to get the most out of the tools.
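As a toy illustration of the kind of duplication check mentioned in the list above, here is a minimal sketch. It is not how Sonarqube or Codescene work internally; the function name `find_duplicate_blocks`, the window size and the sample file names are all assumptions for illustration. It reports blocks of consecutive non-blank lines that appear in more than one place.

```python
from __future__ import annotations

from collections import defaultdict


def find_duplicate_blocks(
    sources: dict[str, str], window: int = 3
) -> dict[str, list[tuple[str, int]]]:
    """Map each repeated block of `window` non-blank lines to its (file, line) locations."""
    seen: dict[str, list[tuple[str, int]]] = defaultdict(list)
    for name, text in sources.items():
        # Keep 1-based line numbers while ignoring blank lines and indentation.
        lines = [
            (number, line.strip())
            for number, line in enumerate(text.splitlines(), start=1)
            if line.strip()
        ]
        for start in range(len(lines) - window + 1):
            chunk = lines[start:start + window]
            block = "\n".join(stripped for _, stripped in chunk)
            seen[block].append((name, chunk[0][0]))
    # Only blocks found at more than one location count as duplicates.
    return {block: places for block, places in seen.items() if len(places) > 1}


# Hypothetical input: two stylesheets that repeat the same rule.
sources = {
    "button_a.css": ".btn {\n  color: red;\n  padding: 4px;\n}\n",
    "button_b.css": ".btn {\n  color: red;\n  padding: 4px;\n}\n.other { margin: 0; }\n",
}
duplicates = find_duplicate_blocks(sources, window=3)
for block, places in duplicates.items():
    print(places, "->", block.splitlines()[0])
```

A check like this could in principle run from a pre-commit hook, but for real projects a dedicated tool will cope far better with renamed variables and reformatted code.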

Thanks to Jim Gumbley, Karl Brown, Jörn Dinkla, Matteo Vaccari and Sarah Taraporewalla for their feedback and input.