Consider a security operations center (SOC) that monitors network and endpoint data in real time to identify threats to its enterprise. Depending on the size of its organization, a SOC may receive about 200,000 alerts per day. Only a small portion of these alerts can receive human attention because each investigated alert may require 15 to 20 minutes of analyst attention to answer a critical question for the enterprise: Is this a benign event, or is my organization under attack? This is a challenge for nearly all organizations, since even small enterprises generate far more network and endpoint alerts and log events than humans can effectively monitor. SOCs therefore must employ security monitoring software to pre-screen and downsample the number of logged events requiring human investigation.

Machine learning (ML) for cybersecurity has been researched extensively because SOC activities are data rich, and ML is now increasingly deployed into security software, though ML is not yet broadly trusted in the SOC. A major barrier is that ML methods suffer from a lack of explainability. Without explanations, it is reasonable for SOC analysts not to trust the ML.

Outside of cybersecurity, there are broad general demands for ML explainability. The European General Data Protection Regulation (Article 22 and Recital 71) encodes into law the “right to an explanation” when ML is used in a way that significantly affects an individual. The SOC analyst also needs explanations because the decisions they must make, often under time pressure and with ambiguous information, can have significant impacts on their organization.

We propose cyber-informed machine learning as a conceptual framework for emphasizing three types of explainability when ML is used for cybersecurity:

- data-to-human

- model-to-human

- human-to-model

In this blog post, we provide an overview of each type of explainability, and we recommend research needed to achieve the level of explainability necessary to encourage use of ML-based systems intended to support cybersecurity operations.

Data-to-Human Explainability

Data-to-human explainability seeks to answer: What is my data telling me? It is the most mature form of explainability, and it is a primary motivation of statistics, data science, and related fields. In the SOC, a basic use case is to understand the normal network traffic profile, and a more specific use case might be to understand the history of a particular internal Internet Protocol (IP) address interacting with a particular external IP address.

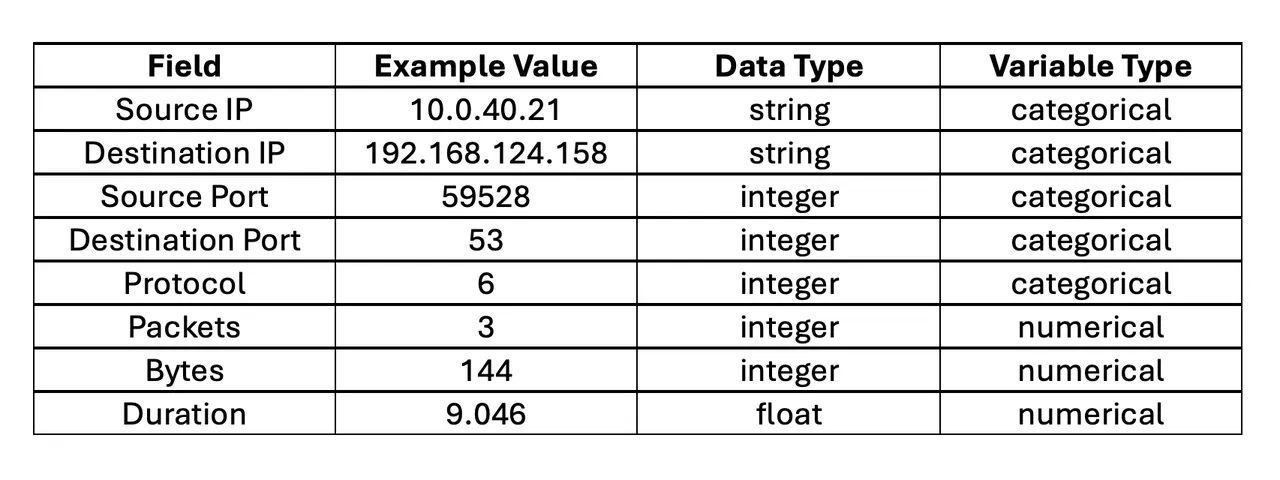

While this type of explainability may seem simple, there are several cybersecurity-specific challenges. For example, consider the NetFlow fields identified in Table 1.

Table 1: NetFlow Example Fields

ML methods can readily be applied to the numerical fields: packets, bytes, and duration. However, source IP and destination IP are strings, and in the context of ML they are categorical variables. A variable is categorical if its range of possible values is a set of levels (categories). While source port, destination port, protocol, and type are represented as integers, they are actually categorical variables. Furthermore, they are non-ordinal because their levels have no sense of order or scale (e.g., port 59528 is not somehow next to or larger than port 53).

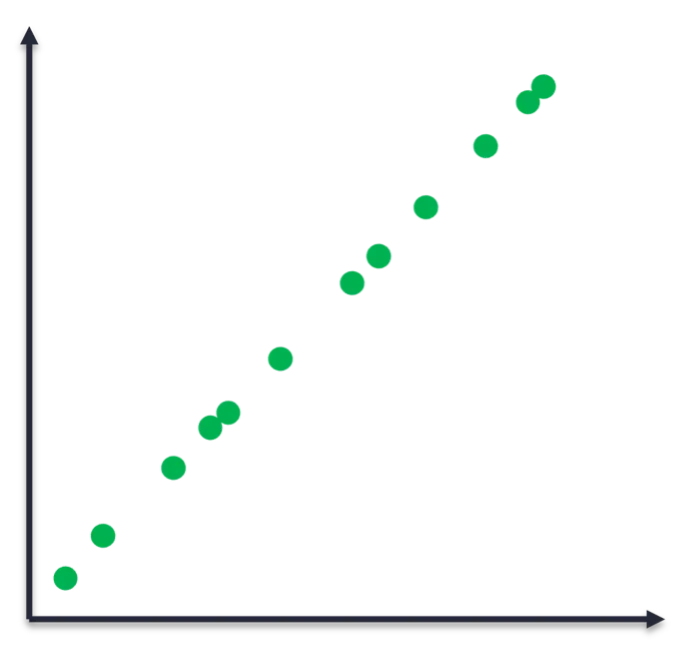
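The distinction between numerical and categorical NetFlow fields can be sketched in a few lines of Python. This is a minimal illustration, not a production feature pipeline: the record, its field names, and the list of known ports are hypothetical, and one-hot encoding is just one common way to represent a non-ordinal categorical value without implying order.

```python
# Hypothetical NetFlow record; field names and values are illustrative only.
record = {
    "src_ip": "10.1.2.3",
    "dst_ip": "8.8.8.8",
    "src_port": 59528,
    "dst_port": 53,
    "protocol": 17,
    "packets": 2,
    "bytes": 148,
    "duration_ms": 7.639,
}

# Numerical fields have order and scale; categorical fields do not,
# even when they happen to be stored as integers.
NUMERICAL = {"packets", "bytes", "duration_ms"}
CATEGORICAL = {"src_ip", "dst_ip", "src_port", "dst_port", "protocol"}


def one_hot(value, levels):
    """Encode a categorical value as a 0/1 vector over the known levels."""
    return [1 if value == level else 0 for level in levels]


# Treating a port as a number would imply port 59528 is "larger" than port 53.
# One-hot encoding represents each level separately and implies no order.
known_ports = [53, 80, 443, 59528]  # assumed vocabulary of ports
dst_port_vector = one_hot(record["dst_port"], known_ports)
numeric_features = [record[name] for name in sorted(NUMERICAL)]

print(dst_port_vector)   # [1, 0, 0, 0]
print(numeric_features)  # [148, 7.639, 2]
```

One-hot vectors grow with the number of distinct levels, which is part of why fields like IP address remain awkward for generic ML methods.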

Consider the data points in Figure 1 to understand why the distinction between numerical and categorical variables is important. The underlying function that generated the data is clearly linear. We can therefore fit a linear model and use it to predict future points. Input variables that are non-ordinal categorical (e.g., IP addresses, ports, and protocols) challenge ML because there is no sense of order or scale to leverage. These challenges often limit us to basic statistics and threshold alerts in SOC applications.

Figure 1: Empirically observed data points that were generated by an underlying linear function

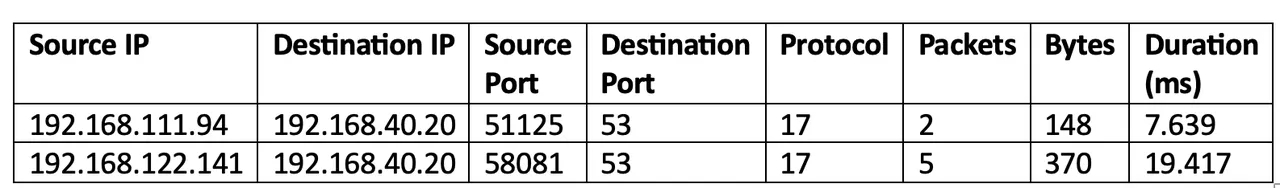
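To make the linear case concrete, the following sketch fits a line to a handful of numerical observations by ordinary least squares and uses it to predict a new point. The data values are invented for illustration and are not the points shown in the figure. The same procedure has no sensible analogue for a port number or IP address, because the fit relies on order and scale.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and co-deviations of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical numerical observations that roughly follow a straight line.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 9.0, 10.8]

slope, intercept = fit_line(xs, ys)

# Because x is numerical, extrapolating to an unseen x is meaningful.
predicted = slope * 6.0 + intercept
print(round(slope, 2), round(intercept, 2), round(predicted, 2))
```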

A related challenge is that cyber data often have a weak notion of distance. For example, how would we quantify the distance between the two NetFlow logs in Table 2? For the numerical variable flow duration, the distance between the two logs is 19.417 – 7.639 = 11.778 milliseconds. However, there is no similar notion of distance between the two ephemeral ports, or between the values of the other categorical variables.

Table 2: Example of two NetFlow logs

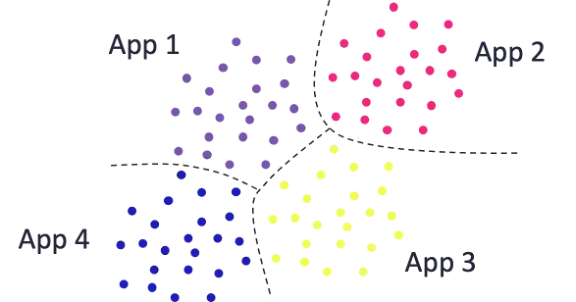

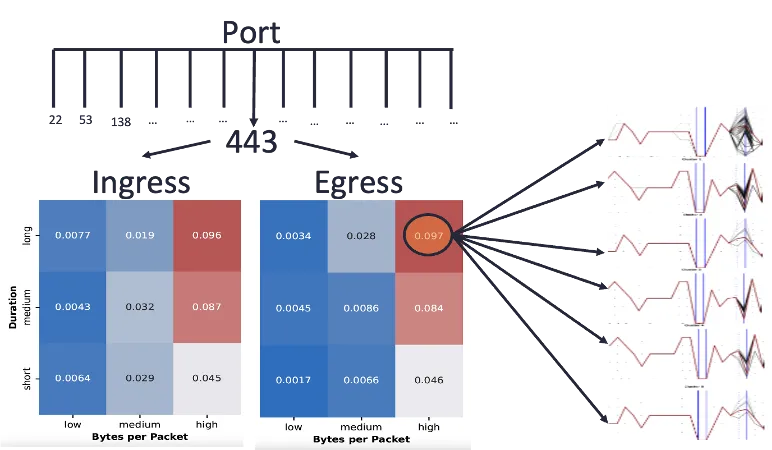

There are some methods for quantifying similarity between logs with categorical variables. For example, we could count the number of equivalently valued fields between the two logs. Logs that share more field values in common are in some sense more similar. Now that we have some quantitative measure of distance, we can try unsupervised clustering to discover natural clusters of logs within the data. We might hope that these clusters would be cyber-meaningful, such as grouping by the application that generated each log, as Figure 2 depicts. However, such cyber-meaningful groupings do not occur in practice without some cajoling, and that cajoling is an example of cyber-informed machine learning: imparting our human cyber expertise into the machine learning pipeline.

Figure 2: Optimistic illustration of clustering NetFlow logs
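The idea of counting equivalently valued fields can be sketched as a simple overlap similarity. The two logs below are hypothetical stand-ins (only the two flow durations are taken from the example in the text), and the choice of which fields to compare is an assumption. Other categorical similarity measures exist; this is the simplest one.

```python
# Fields compared for equality; this selection is an assumption.
CATEGORICAL_FIELDS = ["src_ip", "dst_ip", "src_port", "dst_port", "protocol"]


def overlap_similarity(log_a, log_b, fields=CATEGORICAL_FIELDS):
    """Fraction of the given fields on which the two logs agree exactly."""
    matches = sum(1 for field in fields if log_a[field] == log_b[field])
    return matches / len(fields)


# Hypothetical logs: same DNS conversation, different ephemeral source ports.
log_a = {"src_ip": "10.1.2.3", "dst_ip": "8.8.8.8", "src_port": 59528,
         "dst_port": 53, "protocol": 17, "duration_ms": 19.417}
log_b = {"src_ip": "10.1.2.3", "dst_ip": "8.8.8.8", "src_port": 51144,
         "dst_port": 53, "protocol": 17, "duration_ms": 7.639}

similarity = overlap_similarity(log_a, log_b)  # 4 of 5 fields match
distance = 1.0 - similarity                    # usable by clustering methods

# For a numerical field, ordinary subtraction is already a distance.
duration_gap = abs(log_a["duration_ms"] - log_b["duration_ms"])

print(similarity, round(distance, 3), round(duration_gap, 3))
```

Note that this measure treats every field as equally important, which is rarely true in practice. Weighting the fields is one place where analyst knowledge could enter.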

Figure 3 illustrates how we might impart human knowledge into a machine learning pipeline. Instead of naively clustering all the logs without any preprocessing, data scientists can elicit from cyber analysts the relationships they already know to exist in the data, as well as the types of clusters they would like to understand better. For example, port, flow direction, and packet volumetrics might be of interest. In that case, we might pre-partition the logs by those fields and then perform clustering on the resulting bins to understand their composition.

Figure 3: Illustration of cyber-informed clustering
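A minimal sketch of the pre-partition-then-cluster idea follows. The logs, the partition keys, and the `max_gap` threshold are all hypothetical. The clustering step is a deliberately simple one-dimensional gap-based grouping of packet counts, used here as a stand-in for whatever clustering algorithm a team would actually choose.

```python
from collections import defaultdict


def partition(logs, keys):
    """Group logs into bins keyed by the analyst-chosen fields."""
    bins = defaultdict(list)
    for log in logs:
        bins[tuple(log[k] for k in keys)].append(log)
    return dict(bins)


def gap_clusters(values, max_gap):
    """Split sorted 1-D values into clusters wherever a gap exceeds max_gap."""
    clusters = []
    for value in sorted(values):
        if clusters and value - clusters[-1][-1] <= max_gap:
            clusters[-1].append(value)
        else:
            clusters.append([value])
    return clusters


# Hypothetical flow logs.
logs = [
    {"dst_port": 53, "direction": "outbound", "packets": 1},
    {"dst_port": 53, "direction": "outbound", "packets": 2},
    {"dst_port": 53, "direction": "outbound", "packets": 40},
    {"dst_port": 443, "direction": "outbound", "packets": 12},
    {"dst_port": 443, "direction": "outbound", "packets": 15},
    {"dst_port": 443, "direction": "inbound", "packets": 900},
]

# Step 1: pre-partition by fields the analysts already understand.
bins = partition(logs, keys=("dst_port", "direction"))

# Step 2: cluster within each bin on packet volume.
composition = {
    key: gap_clusters([log["packets"] for log in members], max_gap=5)
    for key, members in bins.items()
}

for key, clusters in composition.items():
    print(key, clusters)
```

In this toy data, the outbound port 53 bin splits into a small-volume cluster and a single high-volume flow, which is the kind of within-bin structure an analyst might want to examine.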

While data-to-human is the most mature type of explainability, we have discussed some of the challenges that cyber data present. Exacerbating these challenges is the large volume of data that cyber processes generate. It is therefore important for data scientists to engage cyber analysts and find ways to impart their expertise into the analysis pipelines.

Model-to-Human Explainability

Model-to-human explainability seeks to answer: What is my model telling me and why? A common SOC use case is understanding why an anomaly detector alerted on a particular event. To avoid worsening the alert burden already facing SOC analysts, it is critical that ML systems deployed in the SOC include model-to-human explainability.

Demand for model-to-human explainability is increasing as more organizations deploy ML into production environments. The European General Data Protection Regulation, the National Artificial Intelligence Engineering initiative, and a widely cited article in Nature Machine Intelligence all emphasize the importance of model-to-human explainability.

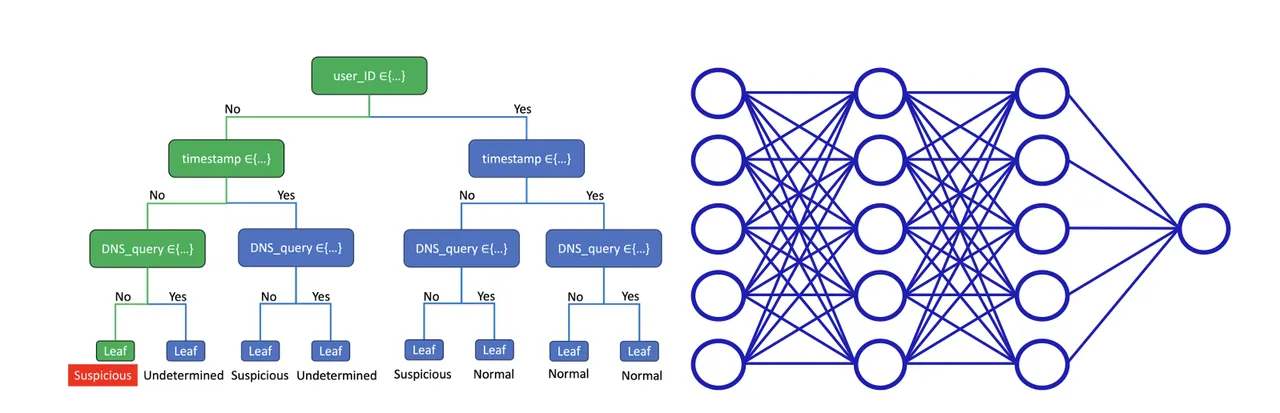

ML models can be classified as white box or black box, depending on how readily their parameters can be inspected and interpreted. White box models can be fully interpretable, and the basis for their predictions can be understood precisely. Note that even white box models can lack interpretability, especially when they become very large. White box models include linear regression, logistic regression, decision trees, and neighbor-based methods (e.g., k-nearest neighbor). Black box models are not interpretable, and the basis for their predictions must be inferred indirectly through methods like analyzing global and local feature importance. Black box models include neural networks, ensemble methods (e.g., random forest, isolation forest, XGBoost), and kernel-based methods (e.g., support vector machine).
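One widely used way to infer global feature importance for a black box model is permutation importance: shuffle one feature at a time and measure how much the model's accuracy drops. The sketch below is a minimal illustration under stated assumptions. The `black_box` function is a hypothetical stand-in for an opaque model, the data are synthetic, and the labels are generated by the model itself, so baseline accuracy is 1.0 by construction.

```python
import random


def black_box(row):
    """Stand-in for an opaque model: we may call it but not inspect it."""
    return 1 if row["bytes"] > 1000 else 0


def accuracy(model, rows, labels):
    """Fraction of rows on which the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, feature, repeats=20, seed=0):
    """Mean drop in accuracy when one feature's values are shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        shuffled = [r[feature] for r in rows]
        rng.shuffle(shuffled)
        # Copy each row, replacing only the feature under test.
        permuted = [{**r, feature: v} for r, v in zip(rows, shuffled)]
        drops.append(baseline - accuracy(model, permuted, labels))
    return sum(drops) / repeats


# Synthetic data: "bytes" drives the prediction, "packets" is ignored.
rows = [{"bytes": 100 * i, "packets": (7 * i) % 5} for i in range(1, 21)]
labels = [black_box(r) for r in rows]

importance = {
    feature: permutation_importance(black_box, rows, labels, feature)
    for feature in ("bytes", "packets")
}
print(importance)
```

Shuffling `packets` cannot change any prediction here, so its importance is exactly zero, while shuffling `bytes` degrades accuracy. The result tells an analyst which inputs the model relies on without opening the model.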

In our previous blog post, we discussed the decision tree as an example of a white box predictive model admitting a high degree of model-to-human explainability; every prediction is fully interpretable. After a decision tree is trained, its rules can be implemented directly into software solutions without having to use the ML model object. These rules can be presented visually in the form of a tree (Figure 4, left panel), easing communication to non-technical stakeholders. Inspecting the tree provides quick and intuitive insights into what features the model estimates to be most predictive of the response.

Figure 4: White box decision tree (left) and a black box neural network (right)
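As a sketch of what implementing a trained tree's rules directly in software might look like, the function below hard-codes a small set of if/else rules and returns both the prediction and the rule path that produced it. The features, thresholds, and labels are hypothetical. They are not taken from the tree in the figure or from any trained model.

```python
def classify_flow(flow):
    """Rules transcribed by hand from a hypothetical trained decision tree.

    Returns (label, explanation), where the explanation is the path of
    conditions that led to the label.
    """
    if flow["dst_port"] == 53:
        if flow["bytes"] > 512:
            return "suspicious", "dst_port == 53 and bytes > 512"
        return "benign", "dst_port == 53 and bytes <= 512"
    if flow["duration_ms"] > 10_000:
        return "suspicious", "dst_port != 53 and duration_ms > 10000"
    return "benign", "dst_port != 53 and duration_ms <= 10000"


# Hypothetical flows.
large_dns = {"dst_port": 53, "bytes": 4096, "duration_ms": 12.0}
short_web = {"dst_port": 443, "bytes": 900, "duration_ms": 250.0}

for flow in (large_dns, short_web):
    label, why = classify_flow(flow)
    print(label, "because", why)
```

Because the rules are plain code, no ML library is needed at run time, and every alert carries its own explanation.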

Although complex models like neural networks (Figure 4, right panel) can more accurately model complex systems, this is not always the case. For example, a survey by Xin et al. compares the performance of various model types, developed by many researchers, across many benchmark cybersecurity datasets. This survey shows that simple models like decision trees often perform similarly to complex models like neural networks. A tradeoff occurs when complex models outperform more interpretable models: improved performance comes at the expense of reduced explainability. However, the survey by Xin et al. shows that the improved performance is often incremental, and in these cases we think that system architects should favor the interpretable model for the sake of model-to-human explainability.

Human-to-Model Explainability

Human-to-model explainability seeks to enable end users to influence an existing trained model. Consider a SOC analyst wanting to tell the anomaly detection model not to alert on a particular log type anymore because it is benign. Because the end user is seldom a data scientist, a key part of human-to-model explainability is integrating adjustments into a predictive model based on judgments made by SOC analysts. This is the least mature form of explainability and requires new research.
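The simplest imaginable form of this feedback loop is an overlay that suppresses alerts an analyst has already marked benign. The sketch below is only that: it does not change the underlying model, and it is not a substitute for the research called for above. The class name, the key fields, and the toy detector are all hypothetical.

```python
class FeedbackFilter:
    """Wraps a detector and suppresses alerts that analysts marked benign."""

    def __init__(self, detector, key_fields):
        self.detector = detector      # callable: log -> bool (alert or not)
        self.key_fields = key_fields  # fields that define a "log type"
        self.benign_keys = set()

    def _key(self, log):
        return tuple(log[field] for field in self.key_fields)

    def mark_benign(self, log):
        """Record an analyst's judgment that this type of log is benign."""
        self.benign_keys.add(self._key(log))

    def should_alert(self, log):
        """Defer to the detector unless an analyst has suppressed this type."""
        if self._key(log) in self.benign_keys:
            return False
        return self.detector(log)


def toy_detector(log):
    """Hypothetical anomaly detector: alerts on unusually large transfers."""
    return log["bytes"] > 10_000


filtered = FeedbackFilter(toy_detector, key_fields=("dst_ip", "dst_port"))

backup_job = {"dst_ip": "10.0.5.9", "dst_port": 873, "bytes": 5_000_000}
unknown = {"dst_ip": "203.0.113.7", "dst_port": 443, "bytes": 5_000_000}

before = filtered.should_alert(backup_job)  # detector alerts
filtered.mark_benign(backup_job)            # analyst dispositions it benign
after = filtered.should_alert(backup_job)   # now suppressed
print(before, after, filtered.should_alert(unknown))
```

A suppression list like this can also hide real attacks that match a benign key, which is one reason more principled ways of feeding analyst judgments back into the model are needed.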

A simple example is the encoding step of an ML pipeline. Recall that ML requires numerical features, but cyber data include many categorical features. Encoding is a technique that transforms categorical features into numerical features, and there are many generic encoding techniques. For example, integer encoding might assign each IP address to an arbitrary integer. This would be naïve, and a better approach would be to work with the SOC analyst to develop cyber-meaningful encoding strategies. For example, we might group IP addresses into internal and external, by geographic region, or by using threat intelligence. By doing this, we impart cyber expertise into the data science pipeline, and this is an example of human-to-model explainability.
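The contrast between a generic encoding and a cyber-meaningful one can be sketched with Python's standard `ipaddress` module. The example assumes that "internal" can be approximated by the module's `is_private` property. A real deployment would more likely use the organization's own address ranges, and the sample IP addresses are illustrative.

```python
import ipaddress


def integer_encode(values):
    """Naive encoding: map each distinct value to an arbitrary integer."""
    mapping = {}
    for value in values:
        mapping.setdefault(value, len(mapping))
    return [mapping[value] for value in values]


def locality_encode(ip):
    """Cyber-informed encoding: 1 for private (internal) addresses, else 0.

    Assumption: private address space approximates 'internal'.
    """
    return 1 if ipaddress.ip_address(ip).is_private else 0


ips = ["10.0.0.5", "8.8.8.8", "192.168.1.20", "10.0.0.5"]

naive = integer_encode(ips)                     # arbitrary, order is meaningless
informed = [locality_encode(ip) for ip in ips]  # carries analyst knowledge

print(naive)     # [0, 1, 2, 0]
print(informed)  # [1, 0, 1, 1]
```

The naive integers suggest that `192.168.1.20` is somehow "twice" `8.8.8.8`, whereas the informed feature states something an analyst actually cares about.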

We take inspiration from a successful movement called physics-informed machine learning [Karniadakis et al. and Hao et al.], which is enabling ML to be used in some engineering design applications. In physics, we have governing equations that describe natural laws like the conservation of mass and the conservation of energy. Governing equations are encoded into models used for engineering analysis. If we were to exercise these models over a large design space, we could use the resulting data (inputs mapped to outputs) to train ML models. This is one example of how our human expertise in physics can be imparted into ML models.

In cybersecurity, we do not have continuous mathematical models of system and user behavior, but we do have sources of cyber expertise. We have human cyber analysts with knowledge, reasoning, and intuition built on experience. We also have cyber analytics, which are encoded forms of our human expertise. Like the physics community, cybersecurity needs methods that enable our rich human expertise to influence the ML models that we use.

Recommendations for Cybersecurity Organizations Using ML

We conclude with a few practical recommendations for cybersecurity organizations using ML. Data-to-human explainability methods are relatively mature. Organizations seeking to learn more from their data can transition methods from existing research and off-the-shelf tools into practice.

Model-to-human explainability can be greatly improved by assigning, at least in the early stages of adoption, a data scientist to support the ML end users as questions arise. Developing cybersecurity data citizens internally is also helpful, and there are abundant professional development opportunities to help cyber professionals acquire these skills. Finally, end users can ask their security software vendors whether their ML tools include various types of explainability. ML models should at least report feature importance, indicating which features of the inputs are most influential to the model’s predictions.

While research is needed to further develop human-to-model explainability methods in cybersecurity, there are a few steps that can be taken now. End users can ask their security software vendors whether their ML tools can be calibrated with human feedback. SOCs might also consider collecting benign alerts dispositioned by manual investigation into a structured database for future model calibration. Finally, the act of retraining a model is a form of calibration, and evaluating when and how SOC models are retrained can be a step toward influencing their performance.
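As one possible starting point for collecting dispositioned alerts, the sketch below stores analyst dispositions in a SQLite table using Python's standard `sqlite3` module. The schema, column names, and sample alert are hypothetical, and an in-memory database is used only to keep the example self-contained.

```python
import sqlite3

# In-memory database for illustration; a real SOC would use persistent storage.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE dispositions (
        alert_id     TEXT PRIMARY KEY,
        rule_name    TEXT NOT NULL,
        src_ip       TEXT,
        dst_ip       TEXT,
        dst_port     INTEGER,
        disposition  TEXT NOT NULL
                     CHECK (disposition IN ('benign', 'malicious')),
        analyst_note TEXT
    )
    """
)


def record_disposition(conn, alert):
    """Store one manually investigated alert and the analyst's judgment."""
    conn.execute(
        "INSERT INTO dispositions VALUES "
        "(:alert_id, :rule_name, :src_ip, :dst_ip, :dst_port, "
        ":disposition, :analyst_note)",
        alert,
    )
    conn.commit()


# Hypothetical dispositioned alert.
record_disposition(conn, {
    "alert_id": "A-0001",
    "rule_name": "dns_volume_spike",
    "src_ip": "10.1.2.3",
    "dst_ip": "8.8.8.8",
    "dst_port": 53,
    "disposition": "benign",
    "analyst_note": "Scheduled inventory scan",
})

# Later, benign examples can be pulled as labeled data for calibration.
benign_rows = conn.execute(
    "SELECT rule_name, dst_port FROM dispositions WHERE disposition = 'benign'"
).fetchall()
print(benign_rows)  # [('dns_volume_spike', 53)]
```

Keeping the original alert fields alongside the disposition is what makes the records usable later as labeled examples.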